Neuronal Dynamics

- weight adjustments

The weights must be adjusted within the dendrites themselves, and the adjustment must not depend on how many other dendrites the neuron has. In particular, there is no central authority that monitors or computes this. The goal is nevertheless to implement Hebb's learning rule, commonly summarized as "Neurons that fire together, wire together." Here it becomes "Dendrites that fire together, wire together."

In this approach, the weight of each dendrite is adjusted as follows: the dendrite regularly receives a stimulus value, and each input stores a timestamp, overwriting the previous one. When the dendrite receives a feedback event, the time elapsed since the stored input timestamp is computed. The feedback value divided by this time delta is then added to the weight, which may therefore increase or decrease depending on the sign of the feedback. Normalization of the weights is not required. The updated weight is used in the next potential calculation.
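The update rule above can be sketched as follows. This is a minimal sketch under stated assumptions: the names `Dendrite`, `on_input`, `on_feedback` and `min_dt` are hypothetical, time is a plain float, and a dendrite's contribution to the potential is assumed to be `weight * stimulus`. Each dendrite only touches its own state, so no knowledge of sibling dendrites (and no central authority) is needed. Inputs that happened shortly before the feedback (small time delta) are affected most, which is the "fire together, wire together" part.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Dendrite:
    """Hypothetical dendrite that adjusts its own weight locally."""
    weight: float = 1.0
    last_input_time: Optional[float] = None
    min_dt: float = 1e-6  # assumption: guards against division by zero

    def on_input(self, stimulus: float, t: float) -> float:
        """Overwrite the stored timestamp and return the weighted input
        (assumed contribution to the potential: weight * stimulus)."""
        self.last_input_time = t
        return self.weight * stimulus

    def on_feedback(self, feedback: float, t: float) -> None:
        """Add feedback / (time since the last input) to the weight.

        Positive feedback strengthens the dendrite, negative feedback
        weakens it. No normalization across dendrites is performed.
        """
        if self.last_input_time is None:
            return  # assumption: no input yet, nothing to associate
        dt = max(t - self.last_input_time, self.min_dt)
        self.weight += feedback / dt


# Usage: one dendrite was active just before the feedback, one long before.
recent, stale = Dendrite(), Dendrite()
recent.on_input(stimulus=1.0, t=9.0)
stale.on_input(stimulus=1.0, t=0.0)
for d in (recent, stale):
    d.on_feedback(feedback=0.5, t=10.0)
print(recent.weight, stale.weight)
```

With the same feedback, the recently active dendrite gains far more weight (delta of 1 time unit) than the stale one (delta of 10 time units).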

- threshold adjustments

The threshold must be adjusted to give the neuron a certain degree of agility. It depends only on timing feedback. The environment sends three kinds of timing feedback: "too late", "too early", and "no result arrived". The threshold is adjusted smoothly by the delta value carried by these events.
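A possible reading of this rule is sketched below. It is a minimal sketch, not the original implementation, and several parts are assumptions: the names `TimingFeedback`, `Threshold`, `softness` and `minimum` are hypothetical; "too early" is assumed to raise the threshold while "too late" and "no result arrived" lower it; the event's delta is treated as a magnitude; and "softly" is interpreted as applying only a fraction of each delta.

```python
from enum import Enum


class TimingFeedback(Enum):
    TOO_EARLY = "too early"
    TOO_LATE = "too late"
    NO_RESULT = "no result arrived"


# Assumed direction per feedback type: firing too early -> raise the
# threshold; firing too late or not at all -> lower it.
_DIRECTION = {
    TimingFeedback.TOO_EARLY: +1.0,
    TimingFeedback.TOO_LATE: -1.0,
    TimingFeedback.NO_RESULT: -1.0,
}


class Threshold:
    """Hypothetical threshold that reacts only to timing feedback."""

    def __init__(self, value: float = 1.0, softness: float = 0.1,
                 minimum: float = 0.01) -> None:
        self.value = value
        self.softness = softness  # assumption: fraction of delta applied per event
        self.minimum = minimum    # assumption: threshold never drops to zero

    def on_feedback(self, kind: TimingFeedback, delta: float) -> float:
        """Move the threshold by a fraction of the event's delta."""
        step = self.softness * abs(delta) * _DIRECTION[kind]
        self.value = max(self.minimum, self.value + step)
        return self.value


th = Threshold(value=1.0, softness=0.1)
th.on_feedback(TimingFeedback.TOO_EARLY, delta=2.0)  # raises the threshold
th.on_feedback(TimingFeedback.TOO_LATE, delta=0.5)   # lowers it slightly
print(round(th.value, 3))
```

A small `softness` keeps individual events from causing large jumps, so the threshold drifts towards a balance between "too early" and "too late" feedback.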

- long-term / short-term memories

This SNN's memory relies on both long-term (LTP) and short-term (STP) plasticity. The two values are kept in balance, and incoming feedback is processed so that this equilibrium is preserved. As mentioned earlier, the environment provides a delta value that indicates how the neuron should adjust its potential. Although STP and LTP operate on different time scales, it is the values of the two plasticity components that must remain balanced, not their durations. The balance therefore holds regardless of whether the underlying mechanism acts over short or long time spans.
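One way to keep the two values in balance is sketched below. This is a hypothetical illustration, not the author's mechanism: `PlasticityMemory`, `stp_share` and `balance_rate` are invented names, "balanced" is interpreted as the two values converging towards each other, STP is assumed to absorb the larger share of each feedback delta (fast time scale), and the rebalancing step is assumed to conserve the total `stp + ltp`.

```python
from dataclasses import dataclass


@dataclass
class PlasticityMemory:
    """Hypothetical STP/LTP pair kept in balance."""
    stp: float = 1.0
    ltp: float = 1.0
    stp_share: float = 0.8     # assumed share of each delta applied to STP
    balance_rate: float = 0.1  # assumed fraction of the gap closed per step

    def apply_feedback(self, delta: float) -> None:
        """Distribute the environment's delta over both components."""
        self.stp += self.stp_share * delta
        self.ltp += (1.0 - self.stp_share) * delta

    def rebalance(self) -> None:
        """Close part of the STP/LTP gap without changing the total."""
        transfer = self.balance_rate * (self.stp - self.ltp) / 2.0
        self.stp -= transfer
        self.ltp += transfer


mem = PlasticityMemory()
mem.apply_feedback(1.0)  # STP jumps, LTP moves only slightly
for _ in range(100):
    mem.rebalance()      # the two values drift back into balance
print(round(mem.stp, 3), round(mem.ltp, 3))
```

The feedback is absorbed quickly by STP, while the rebalancing step moves part of it into LTP over time; only the values are equalized, independent of how fast each component reacts.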

- neurogenesis

Neurogenesis is driven by neurotransmitters, which exert their influence within what I refer to as the neuron field. In this framework, I make use of attractors within these fields, without distinguishing between individual neurotransmitter types at a technical level. Neurons migrate or extend along chemical gradients that they themselves generate, sending signals back into their respective neuron fields. These fields, in turn, propagate the signal to up to n neurons whose current shortage or surplus is already known.

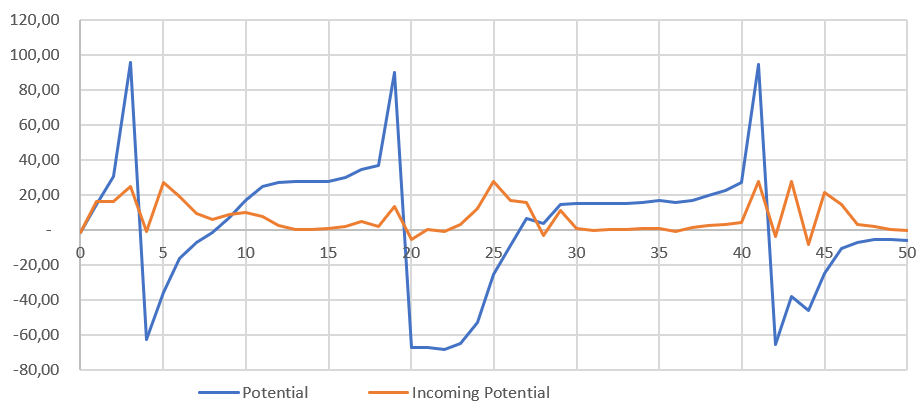
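The relay through the neuron field can be sketched as follows. This is a hypothetical sketch: `FieldNeuron`, `NeuronField`, `balance` and `fanout` are invented names, a signal is a plain number (no neurotransmitter types are distinguished, as in the text), a negative `balance` is assumed to mean a shortage, and the field is assumed to route each signal to the neurons with the largest known shortage first. Gradients and migration are not modeled here.

```python
import heapq
from dataclasses import dataclass, field


@dataclass
class FieldNeuron:
    """Hypothetical neuron as seen by a field.

    balance < 0: shortage, balance > 0: surplus (assumed convention).
    """
    name: str
    balance: float = 0.0
    received: list = field(default_factory=list)


class NeuronField:
    """Hypothetical neuron field that relays signals to up to n neurons."""

    def __init__(self, fanout: int) -> None:
        self.fanout = fanout  # the "n" from the text
        self.neurons: dict[str, FieldNeuron] = {}

    def register(self, neuron: FieldNeuron) -> None:
        self.neurons[neuron.name] = neuron

    def emit(self, sender: str, strength: float) -> list[str]:
        """Relay a signal to up to `fanout` neurons with a known shortage."""
        candidates = [n for n in self.neurons.values()
                      if n.name != sender and n.balance < 0]
        # Most negative balance (largest shortage) first.
        targets = heapq.nsmallest(self.fanout, candidates,
                                  key=lambda n: n.balance)
        for n in targets:
            n.received.append((sender, strength))
        return [n.name for n in targets]


f = NeuronField(fanout=2)
for name, bal in [("a", 0.5), ("b", -0.2), ("c", -0.9), ("d", -0.4)]:
    f.register(FieldNeuron(name, bal))
recipients = f.emit("a", strength=1.0)
print(recipients)
```

Because the field already knows each neuron's shortage or surplus, the sender does not need to know its recipients; it only emits into the field.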

The action potential, that is, the value that is fired, is computed solely from the STP and LTP values. The dendritic input values, by contrast, only determine whether a firing event is triggered. The LTP and STP values can be thought of as “reservoirs” from which a portion is drawn to generate an action potential. Only a percentage of the available STP and LTP is drawn, which allows the model to simulate “fatigue.” Over time, these reservoirs gradually refill, while in parallel their values undergo a long-term decay. An initial simulation shows the following result:

Simulation Action Potential
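The reservoir mechanism described above can be sketched as follows. This is a minimal sketch and does not reproduce the simulation result shown above. The names `Reservoir`, `step`, `fraction`, `refill_rate` and `decay_rate` are hypothetical; the firing decision is assumed to be a comparison of the summed dendritic input against the threshold; refilling is assumed to move the level towards a capacity, and the long-term decay is assumed to shrink that capacity.

```python
from dataclasses import dataclass


@dataclass
class Reservoir:
    """Hypothetical STP or LTP reservoir."""
    level: float
    capacity: float
    refill_rate: float  # assumed fraction of the missing amount restored per tick
    decay_rate: float   # assumed slow long-term decay of the capacity per tick

    def draw(self, fraction: float) -> float:
        """Take a percentage of the current level (this models fatigue)."""
        amount = self.level * fraction
        self.level -= amount
        return amount

    def tick(self) -> None:
        """Refill towards capacity while the capacity itself slowly decays."""
        self.capacity *= 1.0 - self.decay_rate
        self.level += self.refill_rate * (self.capacity - self.level)


def step(inputs_sum: float, threshold: float, stp: Reservoir, ltp: Reservoir,
         fraction: float = 0.3) -> float:
    """Return the fired value, or 0.0 if the neuron does not fire.

    The dendritic input only decides whether to fire; the fired value
    comes solely from the STP and LTP reservoirs.
    """
    fired = 0.0
    if inputs_sum >= threshold:
        fired = stp.draw(fraction) + ltp.draw(fraction)
    stp.tick()
    ltp.tick()
    return fired


stp = Reservoir(level=1.0, capacity=1.0, refill_rate=0.2, decay_rate=0.0)
ltp = Reservoir(level=2.0, capacity=2.0, refill_rate=0.01, decay_rate=0.001)
# Constant strong input: the neuron fires every tick and tires out.
spikes = [step(inputs_sum=1.0, threshold=0.5, stp=stp, ltp=ltp) for _ in range(20)]
print([round(s, 3) for s in spikes[:5]])
```

Under constant input the fired value shrinks from tick to tick because each spike drains part of the reservoirs faster than they refill; with the faster `refill_rate` assumed for STP, the short-term reservoir recovers more quickly once the input stops.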